Blog Entries

The Cloud Resume Challenge - Part 1

I thought the Cloud Resume Challenge sounded pretty cool. Here is the beginning of my journey.

Category: Blog Entry

The cloud has always been so fascinating to me. Servers are so powerful and incredible that hundreds of people can use them at the same time. Data centers house so much raw compute power that even AI is becoming possible. This challenge sounded like a fun way to help me learn more about it.

The Cloud Resume Challenge - Part 2

The first part of the Challenge is building a static website and hosting it in the Cloud!

Category: Blog Entry

I've used HTML/CSS before, and I've worked for a website startup, so I was familiar with the basics of a simple static website and hosting. But I had not really done anything quite like this before.

The Cloud Resume Challenge - Part 3

With the static website pretty much ready to go, there was only one place left to go: the Cloud.

Category: Blog Entry

Ahead of me were technologies and software that I was not familiar with. From here on out, it would be a lot more learning than before.

Adventures in self-hosted AI - Part 1

I really like AI. I really like the cloud. But I really don't want to use somebody else's AI on somebody else's cloud.

Category: Blog Entry

Large Language Models and txt2img generators are both fascinating and fun! However, I do not want to rely on the cloud to use either of these. I don't want to pay for the privilege of using compute power that I already have at home. Luckily, there are open-source options for both, which I can run on my own hardware in my own house.

Basic Computer Troubleshooting Overview

A quick guide to help you fix a misbehaving computer

Category: Educational Article

When your computer just isn't doing what you are telling it to, or isn't behaving the way you expect, getting everything back to the way it's supposed to be can be as simple as closing and reopening the problem program.

Graphics Cards through the ages. Part 1: Nvidia

I decided to write up a history of Gaming Graphics Cards. In this episode we explore the history of Nvidia and the GPUs that they have made.

Category: Educational Article

The modern gaming graphics card we all know and love today does so much for us. It can help perform blockchain validation, it can run Machine Learning models, and it can even occasionally play video games! It all started with the Unified Shader made for playing 3D games on the PC.

Graphics Cards through the ages. Part 2: AMD

The story isn't done yet. AMD was in the game of making Unified Shader GPUs right from the beginning.

Category: Educational Article

AMD (as ATI, before the 2006 acquisition) basically invented the Unified Shader GPU for the Xbox 360, just like they invented the 64-bit x86 CPU. But they weren't the first to bring it to the PC platform. This would prove to be a pattern, as they lag behind their competitor Nvidia when it comes to getting new features to market.

Image Galleries

PCs from my Past

I have built a lot of computers over the ages. Here are a few.

Computers at Work

I've worked with all sorts of computers for most of my professional life.

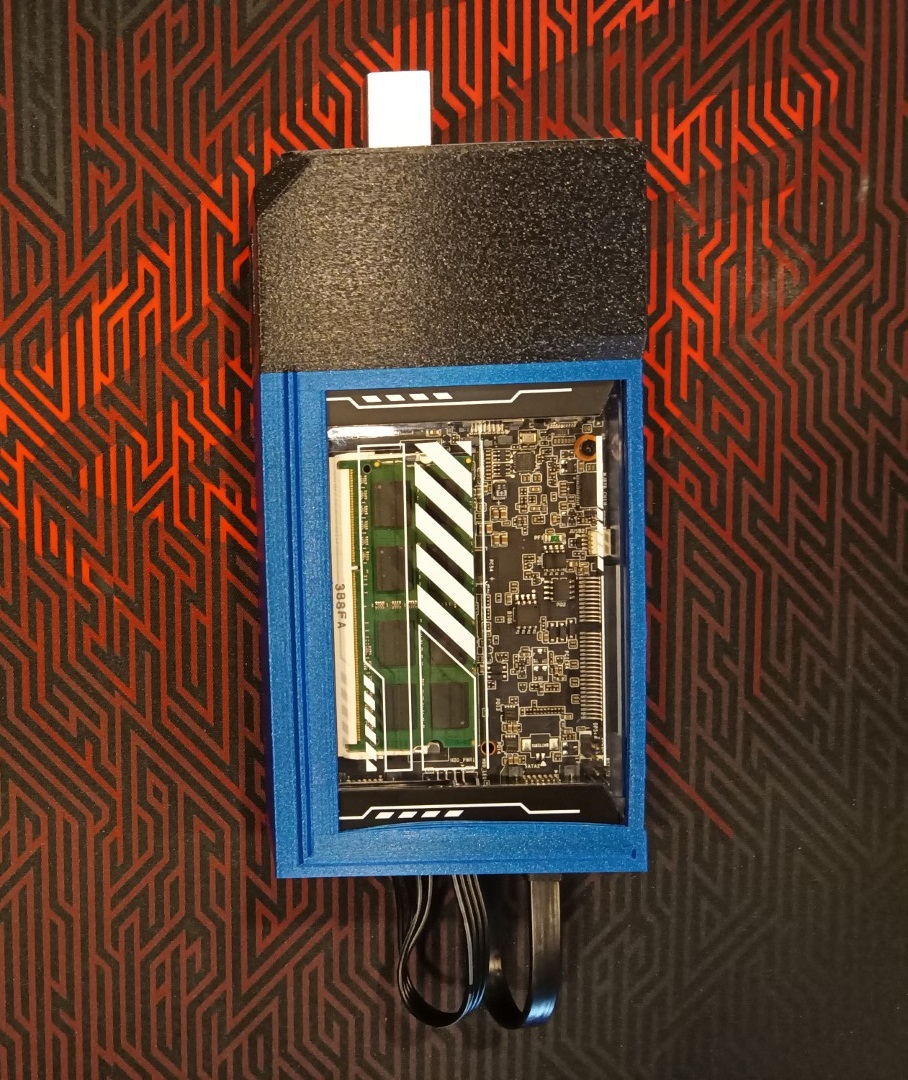

My First Cyberdeck

I found inspiration for my first cyberdeck from Cyberpunk 2077.

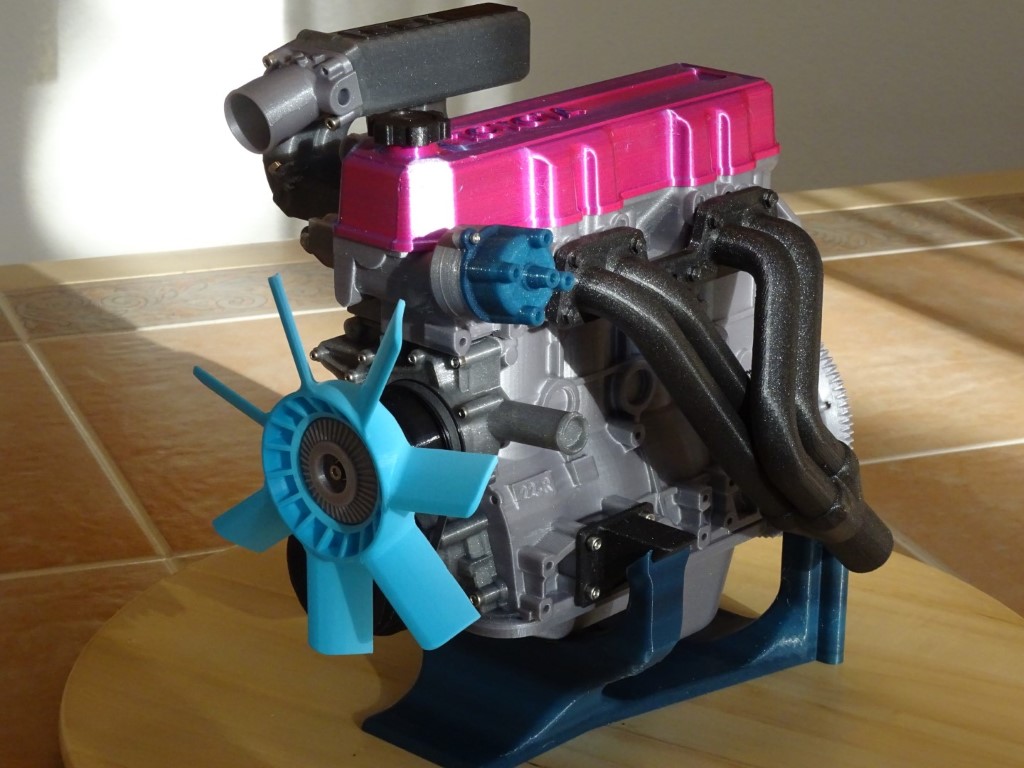

Scale model of the 22RE

I 3D printed a functional scale model of the Toyota 22RE engine.

Adventures in Radio Control aircraft!

Flying RC aircraft is one of my favorite things to do!

Scale model of Metal Gear Rex

FDM finally allowed me to create a model of my favorite mech in the world: Metal Gear Rex!

Electro-plating a 3D print

I electro-plated copper onto this 3D print of a Dragon Skull!

My very first planted Aquarium!

I like aquariums. I like it when they have lots of life in them, including plant life.