This is an overview of all modern Graphics Processing Units (GPUs). Since there are so many, we will split them up, starting with Nvidia.

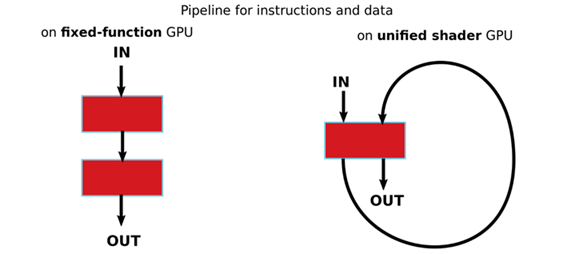

Birth of the Modern GPU

The modern GPU that we are familiar with today, and the only kind that AMD, Intel, or Nvidia makes, is built on a Unified Shader architecture. A shader is how the computer calculates the lighting and color of an object simulated in a 3D scene. Before the unified shader paradigm, graphics cards had separate dedicated hardware for each of the multiple steps of processing. The first step is vertex shading, which calculates the lighting and color for the vertices of the object's model itself. The second step is pixel shading, which calculates the color of each pixel on the computer's display. A unified shader is a processor that can perform both of these steps rather than just one.

Separate shader hardware made the GPU less flexible and sometimes slower than intended: if one set of processors was stressed more than the other, the lightly loaded set sat idle, waiting on the busy one. This led to the development of Unified Shader processors, all-purpose processors that could handle every step of the shader process. This made GPUs more easily expandable and more flexible, able to handle a wider array of tasks at the same time. The Unified Shader was first introduced by ATI in the Xbox 360 in November 2005 and was quickly adopted by Nvidia for its desktop GPU lines. Nvidia even managed to beat ATI to the desktop market with this feature. Unified Shaders have been used on all desktop GPUs since then.
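The idle-hardware problem can be illustrated with a small, idealized model. This is only a sketch: the unit counts and workloads are made-up numbers, the two stages are assumed to overlap perfectly, and real GPUs schedule work in far more complicated ways.

```python
def frame_time_split(vertex_work, pixel_work, vertex_units, pixel_units):
    """Frame time when each stage has its own dedicated pool of units."""
    # Each pool can only run its own stage, so the frame is finished
    # only when the slower of the two pools is finished.
    return max(vertex_work / vertex_units, pixel_work / pixel_units)


def frame_time_unified(vertex_work, pixel_work, total_units):
    """Frame time when every unit can run either stage."""
    # All work is shared across all units, so no unit sits idle.
    return (vertex_work + pixel_work) / total_units


# Hypothetical workloads: (vertex work, pixel work) in arbitrary units.
workloads = {
    "balanced": (200, 600),
    "geometry heavy": (600, 200),
    "pixel heavy": (50, 750),
}

for name, (v, p) in workloads.items():
    split = frame_time_split(v, p, vertex_units=8, pixel_units=24)
    unified = frame_time_unified(v, p, total_units=32)
    print(f"{name:15s} split={split:6.2f}  unified={unified:6.2f}")
```

In this toy model the split design only keeps up when the workload happens to match the fixed 8:24 hardware ratio. As soon as a scene leans toward geometry or toward pixels, one pool waits on the other, while the unified pool takes the same time in all three cases.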

Nvidia through the Generations

2006 - Tesla

Nvidia's first Unified Shader GPU was the GeForce 8800 GTX, released in November 2006 for $599. This GPU was built using a processor architecture that Nvidia called Tesla. This architecture introduced a great many features that Nvidia still uses on their cards to this day, such as CUDA acceleration, which allows general purpose computing to happen on the GPU. Tesla was also Nvidia's first architecture to feature DirectX 10 support.

| Model | Codename | Architecture | Transistor Count | Video Memory | PCI-E Interface | TDP | Passmark Score |
| --- | --- | --- | --- | --- | --- | --- | --- |
| GeForce 8800 GTX | G80 | 90nm Tesla | 681 Million | 768MB GDDR3 | PCI-E 1.0 | 145 Watts | 657 |

2007 saw only a refined version of the 8800 GTX, called the 8800 GT. Released in October 2007 for $249, it used the same Tesla architecture on the brand new 65nm process node. Along with some other cost-saving improvements, this let it match or closely follow the 8800 GTX for less than half the price. This is also where Nvidia made the switch to PCI-E 2.0.

| Model | Codename | Architecture | Transistor Count | Video Memory | PCI-E Interface | TDP | Passmark Score |
| --- | --- | --- | --- | --- | --- | --- | --- |
| GeForce 8800 GT | G92 | 65nm Tesla | 754 Million | 1GB GDDR3 | PCI-E 2.0 | 125 Watts | 514 |

The next generation of Nvidia GPUs was a refined version of the same Tesla architecture using a 65nm process. The 9800 GTX was released in April 2008 for $350.

2008 sure was a wild year for Nvidia. Even though they had just launched the 9800 GTX, they introduced yet another flagship card with a new naming scheme just two months later! The GTX 280 was released in June 2008 for $650. It used the same 65nm Tesla architecture as the 9800 GTX, but with a lot more transistors this time!

Just one month after that, in July 2008, they followed up with the 9800 GTX+ for $229, which used a brand new 55nm process and double the VRAM.

| Model | Codename | Architecture | Transistor Count | Video Memory | PCI-E Interface | TDP | Passmark Score |
| --- | --- | --- | --- | --- | --- | --- | --- |
| GeForce 9800 GTX | G92 | 65nm Tesla | 754 Million | 512MB GDDR3 | PCI-E 2.0 | 140 Watts | 769 |
| GeForce 9800 GTX+ | G92b | 55nm Tesla | 754 Million | 1GB GDDR3 | PCI-E 2.0 | 141 Watts | 746 |
| GeForce GTX 280 | GT200 | 65nm Tesla | 1.4 Billion | 1GB GDDR3 | PCI-E 2.0 | 236 Watts | 1040 |

In 2010, Nvidia introduced a new architecture called Fermi with support for DirectX 11, starting with the GTX 480 in March 2010 for $499. The Fermi line is where Nvidia first started using the long-lived GDDR5 for the VRAM. Fermi was also infamously power hungry and ran very hot. So hot that it required a huge metal housing that could reach an uncomfortable-to-touch 120 degrees F, and that is just the outside shroud around the heatsink! Later, in November 2010, the GTX 580 was released, also for $499. This card came with some bonus refinements like more VRAM and higher clock speeds, but most importantly lower power consumption and temperatures. Some of these improvements were achieved by throttling the clock down when the card detects power use that is too high, in order to keep temperatures under control.

| Model | Codename | Architecture | Transistor Count | Video Memory | PCI-E Interface | TDP | Passmark Score |
| --- | --- | --- | --- | --- | --- | --- | --- |
| GeForce GTX 480 | GF100 | 40nm Fermi | 3 Billion | 1.5GB GDDR5 | PCI-E 2.0 | 250 Watts | 3595 |
| GeForce GTX 580 | GF110 | 40nm Fermi | 3 Billion | 4GB GDDR5 | PCI-E 2.0 | 244 Watts | 4617 |
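The idea of throttling the clock when power use gets too high can be sketched in a few lines. This is not Nvidia's actual mechanism: the function name `throttle_clock`, the linear power model, and every number below are assumptions chosen only to show the shape of such a control loop.

```python
def throttle_clock(clock_mhz, watts_per_mhz, power_limit_w,
                   step_mhz=10, min_clock_mhz=100):
    """Step the clock down until the estimated power fits under the limit.

    Assumption: power is modeled as clock * watts_per_mhz, a deliberate
    oversimplification (real power also depends on voltage and workload).
    """
    while (clock_mhz > min_clock_mhz
           and clock_mhz * watts_per_mhz > power_limit_w):
        clock_mhz = max(min_clock_mhz, clock_mhz - step_mhz)
    return clock_mhz


# Hypothetical card: a heavy load would draw 0.35 W per MHz, limit is 250 W.
print(throttle_clock(800, 0.35, 250))  # clock is reduced to fit the limit
print(throttle_clock(700, 0.35, 250))  # already under the limit, unchanged
```

The useful property is that the card only gives up clock speed in the rare workloads that would push it past its power budget, and runs at full speed the rest of the time.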

The GTX 680 was released in March 2012 for $500 using a new architecture called Kepler. Nvidia made the move to PCI-E 3.0 with this card. On the card itself were some big improvements, like a redesigned memory controller that pushed the GDDR5 memory even faster. So much faster that Nvidia could use a cheaper, smaller memory bus and still gain more performance. A hardware H.264 encoder was added that helped with capturing gameplay video and streaming gameplay over the Internet. G-Sync also made its first appearance this year. Nvidia also did something very interesting this year. Previously, the top tier GTX x80 flagship models were all Gx100 codename models. This time, the GTX 680 used the smaller GK104. The Gx104 models had previously been the GTX x60 mid range cards…

| Model | Codename | Architecture | Transistor Count | Video Memory | PCI-E Interface | TDP | Passmark Score |
| --- | --- | --- | --- | --- | --- | --- | --- |
| GeForce GTX 680 | GK104 | 28nm Kepler | 3.5 Billion | 4GB GDDR5 | PCI-E 3.0 | 195 Watts | 5498 |
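The trade-off between memory speed and bus width comes down to simple arithmetic: peak bandwidth is the bus width multiplied by the per-pin data rate. The sketch below uses round, illustrative figures (a 384-bit bus at 4 Gbit/s per pin versus a 256-bit bus at 6 Gbit/s per pin) that are assumptions for the example, not numbers taken from the tables in this article.

```python
def memory_bandwidth_gb_s(bus_width_bits, data_rate_gbps):
    """Peak memory bandwidth in GB/s.

    bus_width_bits: number of data pins on the memory bus
    data_rate_gbps: effective data rate per pin, in Gbit/s
    Dividing by 8 converts bits to bytes.
    """
    return bus_width_bits * data_rate_gbps / 8


wide_and_slow = memory_bandwidth_gb_s(384, 4.0)    # wider bus, slower memory
narrow_and_fast = memory_bandwidth_gb_s(256, 6.0)  # narrower bus, faster memory

print(f"384-bit @ 4 Gbit/s: {wide_and_slow:.1f} GB/s")
print(f"256-bit @ 6 Gbit/s: {narrow_and_fast:.1f} GB/s")
```

With these figures both configurations reach the same peak bandwidth, which is why faster memory lets a designer shrink the bus, and with it the cost of the chip and the board, without giving up throughput.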

Nvidia took the opportunity to release BIG versions of Kepler in 2013, instead of the normal smaller and more refined versions. In February 2013, the GTX Titan appeared for $999. It was basically a huge version of the GTX 680 with a massive 6GB of VRAM. It was Nvidia saying that they own the pinnacle of single GPU gaming, graphics, and compute performance, and they gave it a name to match. Another Big Kepler came in May 2013 in the form of the GTX 780 for $499, and again in November 2013 in the form of the GTX 780 Ti for $699. Twice in one year, Nvidia created a new upper tier for the biggest, baddest GPU on the market: first the Titan, and then the Ti models. That being said, they were all practically the same Big Kepler, but with differences in clock and memory speeds.

| Model | Codename | Architecture | Transistor Count | Video Memory | PCI-E Interface | TDP | Passmark Score |
| --- | --- | --- | --- | --- | --- | --- | --- |
| GeForce GTX Titan | GK110 | 28nm Kepler | 7 Billion | 6GB GDDR5 | PCI-E 3.0 | 230 Watts | 9064 |
| GeForce GTX 780 | GK110 | 28nm Kepler | 7 Billion | 3GB GDDR5 | PCI-E 3.0 | 230 Watts | 7900 |
| GeForce GTX 780 Ti | GK110 | 28nm Kepler | 7 Billion | 6GB GDDR5 | PCI-E 3.0 | 230 Watts | 9341 |

The next architecture introduced by Nvidia was Maxwell, but it wasn't immediately used for the next generation of GPUs. First, Maxwell 1.0 was used in the lower end GTX 750 Ti (codenamed GM107) in February 2014. It can be considered a practice GPU for this new architecture, as it was the first time that a GPU included an onboard ARM CPU of its very own. This allowed it to be more independent of the system's primary CPU, leaving more CPU resources for games to take advantage of. A more refined version of the architecture called Maxwell 2.0 was introduced with the GTX 980 (codenamed GM204), which was released in September 2014 for $549. A big improvement that Maxwell had over Kepler was power efficiency, with the top end card, the GTX 980, coming in under 200 Watts!

| Model | Codename | Architecture | Transistor Count | Video Memory | PCI-E Interface | TDP | Passmark Score |
| --- | --- | --- | --- | --- | --- | --- | --- |
| GeForce GTX 750 Ti | GM107 | 28nm Maxwell 1.0 | 1.8 Billion | 2GB GDDR5 | PCI-E 3.0 | 60 Watts | 3822 |
| GeForce GTX 980 | GM204 | 28nm Maxwell 2.0 | 5.2 Billion | 4GB GDDR5 | PCI-E 3.0 | 165 Watts | 10832 |
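Maxwell's efficiency gain over Kepler can be checked with a rough calculation from the tables in this article: divide each card's Passmark score by its TDP. This is only a sketch. TDP is a rated figure rather than measured power draw, and the helper name `score_per_watt` is made up for the example.

```python
# (model, architecture, TDP in watts, Passmark score), copied from the
# tables in this article.
cards = [
    ("GeForce GTX 680", "Kepler", 195, 5498),
    ("GeForce GTX 780 Ti", "Kepler", 230, 9341),
    ("GeForce GTX 750 Ti", "Maxwell 1.0", 60, 3822),
    ("GeForce GTX 980", "Maxwell 2.0", 165, 10832),
]


def score_per_watt(tdp_watts, passmark):
    """Passmark points per watt of rated TDP (higher is more efficient)."""
    return passmark / tdp_watts


for model, arch, tdp, score in cards:
    efficiency = score_per_watt(tdp, score)
    print(f"{model:20s} {arch:12s} {efficiency:5.1f} points per watt")
```

By this measure the two Kepler cards land at roughly 28 and 41 points per watt, while both Maxwell cards land at around 64 to 66, even though all four are built on the same 28nm process.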

Nvidia continued the Titan line with its own Maxwell version, the Titan X, in March 2015 for the traditional $999. In June 2015, Nvidia released the GTX 980 Ti for $649.

| Model | Codename | Architecture | Transistor Count | Video Memory | PCI-E Interface | TDP | Passmark Score |
| --- | --- | --- | --- | --- | --- | --- | --- |
| GeForce GTX Titan X | GM200 | 28nm Maxwell 2.0 | 8 Billion | 12GB GDDR5 | PCI-E 3.0 | 250 Watts | 12642 |
| GeForce GTX 980 Ti | GM200 | 28nm Maxwell 2.0 | 8 Billion | 6GB GDDR5 | PCI-E 3.0 | 250 Watts | 13702 |

A new architecture came to us in the form of Pascal. The GTX 1080 was released in May 2016 for $599, and the traditional Titan version, named the Titan X again, followed in August 2016, for $1200 this time. But don't worry, the 2015 version was named the GTX Titan X. This time it is just the Titan X. No confusion here… Pascal kept the very power efficient legacy of Maxwell and introduced a refined version of GDDR5 called GDDR5X, which allowed even higher memory clock speeds. Along with some VR focused features, Pascal also focused on compute performance to help sales in the data center and supercomputer markets.

| Model | Codename | Architecture | Transistor Count | Video Memory | PCI-E Interface | TDP | Passmark Score |
| --- | --- | --- | --- | --- | --- | --- | --- |
| GeForce GTX 1080 | GP104 | 16nm Pascal | 7.2 Billion | 8GB GDDR5X | PCI-E 3.0 | 180 Watts | 14559 |
| Titan X | GP102 | 16nm Pascal | 12 Billion | 12GB GDDR5X | PCI-E 3.0 | 250 Watts | 13659 |

In 2017, both the GTX 1080 and the Titan X got an upgrade. The GeForce GTX 1080 Ti was released in March 2017 for $699. Then Nvidia seemed to suddenly realize that the Titan X product naming might be a bit confusing, so they made a new, separate Titan. The GeForce Titan Xp was released in April 2017 for $1200. A new architecture made a brief appearance this year as well. The Volta architecture appeared in the form of the GeForce Titan V in December 2017 for $2999. It only appeared in Titan cards, and was focused almost exclusively on data center compute and supercomputer usage. It also happened to use the new HBM2 memory. It seemed to be a practice card for testing new features and new memory types.

| Model | Codename | Architecture | Transistor Count | Video Memory | PCI-E Interface | TDP | Passmark Score |
| --- | --- | --- | --- | --- | --- | --- | --- |
| GeForce GTX 1080 Ti | GP102 | 16nm Pascal | 12 Billion | 11GB GDDR5X | PCI-E 3.0 | 250 Watts | 17349 |
| GeForce GTX Titan Xp | GP102 | 16nm Pascal | 12 Billion | 12GB GDDR5X | PCI-E 3.0 | 250 Watts | 16634 |
| GeForce GTX Titan V | GV100 | 12nm Volta | 21.1 Billion | 12GB HBM2 | PCI-E 3.0 | 250 Watts | 17883 |

2018 brought a new architecture called Turing with some radical changes, including a new naming scheme, the brand new GDDR6 memory, and what Nvidia hoped would be a killer feature. Nvidia introduced the RT processing cores and claimed that they were finally ready to perform real-time ray-traced lighting in games! They were so excited about this feature that they changed the traditional GTX prefix to RTX, even though this was very close to the Radeon prefix of RX! The RTX 2080 launched in September 2018 for $799. Breaking tradition yet again, the RTX 2080 Ti was also released in September 2018, for $1199. The Titan RTX was released in December 2018 for $2499.

| Model | Codename | Architecture | Transistor Count | Video Memory | PCI-E Interface | TDP | Passmark Score |
| --- | --- | --- | --- | --- | --- | --- | --- |
| GeForce RTX 2080 Ti | placeholder | Xnm Turing | X Billion | XGB GDDR6 | PCI-E 3.0 | X Watts | X |
| GeForce Titan RTX | placeholder | Xnm Turing | X Billion | XGB GDDR6 | PCI-E 3.0 | X Watts | X |